Data processing with Spring Cloud

At Altia, we have designed several programmes to help final-year students and recent graduates start out in the IT sector, bringing their talent and passion for technology. One of these programmes is Hunters: trailblazers who love to follow trends and want to help anticipate future challenges. Being a Hunter means being part of a diverse group that generates and transfers knowledge. We share some of this knowledge through these articles on the latest in tech, like today's article on Spring Cloud Data Flow.

Spring Cloud Data Flow is a Spring Cloud tool for building and orchestrating data processing pipelines, either as real-time streams or as batch jobs (including scheduled tasks). It deploys a series of microservices on a platform such as Kubernetes, and together these microservices form the data processing pipeline.

What are the advantages of this technology?

Spring Cloud Data Flow is a platform for creating data processing pipelines, built with Spring Boot as part of the Spring Cloud ecosystem. Among other things, it offers:

- Local deployment for testing pipelines, either as a regular Java application or with Docker.

- Cloud deployment on a Kubernetes cluster, either through Helm or as a regular application. The server interacts with Kubernetes to manage scheduled tasks as CronJobs, pulling the container image for each job from a Docker registry.

- A web-based dashboard that allows the pipelines to be displayed and managed.

- Integration with OAuth security platforms.

- Integration with CI/CD platforms through its REST API.

- Official support for Kubernetes and Cloud Foundry, plus community projects that add support for OpenShift, Nomad and Apache Mesos.
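To make the scheduling and REST API points more concrete, here is a minimal Java sketch that asks a Data Flow server to schedule an existing task definition; on Kubernetes, the server turns such a schedule into a CronJob. It assumes a server at `http://localhost:9393` (the default port), a hypothetical task definition called `nightly-report`, and a server version that exposes `POST /tasks/schedules` with the `scheduleName`, `taskDefinitionName` and `properties` parameters and the `scheduler.cron.expression` property. Check the REST API guide for your version before relying on these names.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Sketch: schedule an existing task definition through the Data Flow REST API.
// ASSUMPTIONS: server at localhost:9393 and a server version that exposes
// POST /tasks/schedules with the parameters used below.
final class ScheduleTaskSketch {

    static final String SERVER = "http://localhost:9393";

    // Encodes parameters as application/x-www-form-urlencoded, in insertion order.
    static String formBody(Map<String, String> params) {
        return params.entrySet().stream()
                .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8)
                        + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
    }

    static Map<String, String> scheduleParams(String scheduleName, String taskName, String cron) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("scheduleName", scheduleName);
        params.put("taskDefinitionName", taskName);
        // Cron expression handed to the platform scheduler (a CronJob on Kubernetes).
        params.put("properties", "scheduler.cron.expression=" + cron);
        return params;
    }

    static HttpRequest scheduleRequest(String scheduleName, String taskName, String cron) {
        String body = formBody(scheduleParams(scheduleName, taskName, cron));
        return HttpRequest.newBuilder(URI.create(SERVER + "/tasks/schedules"))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // Sends the request and returns the HTTP status; needs a running Data Flow server.
    static int send(HttpRequest request) throws IOException, InterruptedException {
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode();
    }
}
```

For example, `ScheduleTaskSketch.send(ScheduleTaskSketch.scheduleRequest("nightly", "nightly-report", "0 2 * * *"))` would ask the server to run the hypothetical task every day at 02:00.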

What does the architecture look like?

Spring Cloud Data Flow has two fundamental pillars:

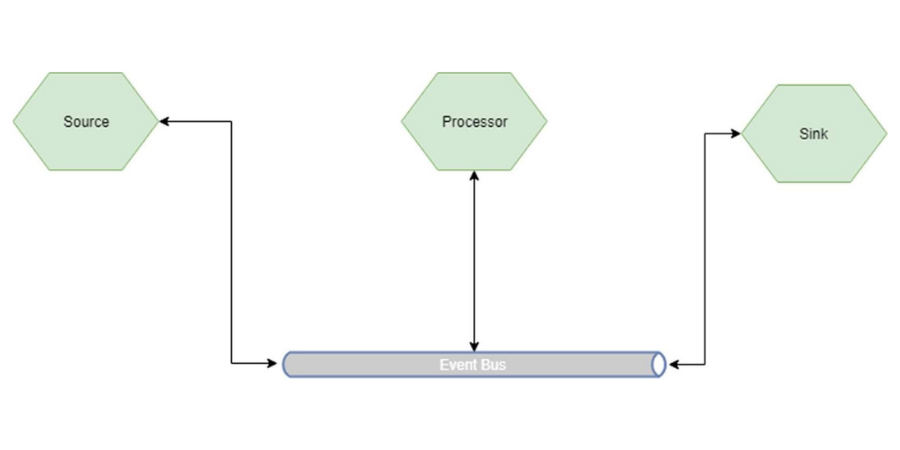

Stream processing: used to run ETL pipelines in real time. Each stage of the pipeline is a separate, long-running application, and the stages communicate by exchanging events through a message broker (Kafka or RabbitMQ).

There are three types of nodes:

- Source: reacts to an event and initiates processing.

- Processor: applies transformations or other processing to the events emitted by the Source.

- Sink: the end node, which receives data from a Processor (or directly from the Source) and produces the final output.

Figure 1: Stream diagram.
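The three node types map naturally onto Java's functional interfaces: Spring Cloud Stream lets you write a source as a `Supplier`, a processor as a `Function` and a sink as a `Consumer`. The sketch below wires the three together in plain Java, with in-memory queues standing in for the Kafka or RabbitMQ destinations; the trim-and-uppercase processor and the event values are purely illustrative. Unlike a real deployment, where each stage runs concurrently as its own application, the stages here run one after another in a single thread.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Locale;
import java.util.Queue;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Plain-Java sketch of a Source -> Processor -> Sink stream.
// The two queues stand in for the broker destinations that connect
// separate applications in a real Spring Cloud Data Flow deployment.
final class StreamSketch {

    static List<String> run(List<String> incomingEvents) {
        // Source: emits the next incoming event, or null when none are left.
        Iterator<String> events = incomingEvents.iterator();
        Supplier<String> source = () -> events.hasNext() ? events.next() : null;

        // Processor: an illustrative transformation applied to each event.
        Function<String, String> processor = event -> event.trim().toUpperCase(Locale.ROOT);

        // Sink: stores the final output (a database or file in a real pipeline).
        List<String> stored = new ArrayList<>();
        Consumer<String> sink = stored::add;

        Queue<String> sourceToProcessor = new ArrayDeque<>();
        Queue<String> processorToSink = new ArrayDeque<>();

        // The source publishes every event to its output destination.
        for (String event = source.get(); event != null; event = source.get()) {
            sourceToProcessor.add(event);
        }
        // The processor consumes its input destination and publishes the results.
        for (String event = sourceToProcessor.poll(); event != null; event = sourceToProcessor.poll()) {
            processorToSink.add(processor.apply(event));
        }
        // The sink consumes the transformed events.
        for (String event = processorToSink.poll(); event != null; event = processorToSink.poll()) {
            sink.accept(event);
        }
        return stored;
    }
}
```

In a real stream, swapping the processor only means redeploying that one application; the source and sink keep talking to the same broker destinations.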

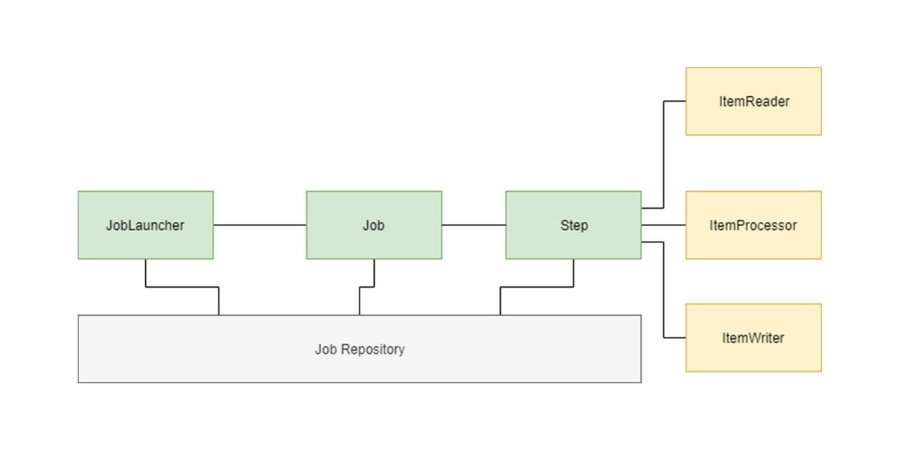

Batch processing: here the microservices are short-lived. They run to completion and record the results of each execution so that it can be monitored afterwards. These microservices are built with Spring Cloud Task and Spring Batch.

There are three basic entities:

- Task: a short-lived process that runs as a microservice and ends when its work is done.

- Job: an entity that encapsulates the whole batch process as a sequence of one or more Steps.

- Step: a domain object that encapsulates an independent, sequential phase of a batch process. A chunk-oriented Step typically has an ItemReader, an optional ItemProcessor and an ItemWriter.

Figure 2: Batch diagram.
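The Job, Step, reader, processor and writer roles can be illustrated with a simplified, plain-Java model of chunk-oriented processing. The `Reader`, `Processor` and `Writer` interfaces below are hypothetical stand-ins, not the Spring Batch API, but they follow the same conventions: the reader returns `null` when the input is exhausted, a processor that returns `null` filters the item out, and items are read in chunks of a fixed size and written together.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Plain-Java sketch of Spring Batch-style chunk processing. Reader, Processor
// and Writer are hypothetical simplified interfaces, not the Spring Batch API.
final class BatchSketch {

    interface Reader<T> { T read(); }                 // null means "no more input"
    interface Processor<I, O> { O process(I item); }  // null means "filter this item out"
    interface Writer<T> { void write(List<T> chunk); }

    // One chunk-oriented Step: read up to chunkSize items, process them,
    // write the surviving items together, and repeat until the input runs out.
    static <I, O> int runStep(Reader<I> reader, Processor<I, O> processor,
                              Writer<O> writer, int chunkSize) {
        if (chunkSize <= 0) throw new IllegalArgumentException("chunkSize must be positive");
        int written = 0;
        while (true) {
            List<O> chunk = new ArrayList<>();
            int read = 0;
            I item;
            while (read < chunkSize && (item = reader.read()) != null) {
                read++;
                O out = processor.process(item);
                if (out != null) chunk.add(out);
            }
            if (!chunk.isEmpty()) {
                writer.write(chunk);
                written += chunk.size();
            }
            if (read < chunkSize) return written;   // the reader is exhausted
        }
    }

    // A Job: an ordered sequence of one or more Steps, run one after another.
    static void runJob(List<Runnable> steps) {
        if (steps.isEmpty()) throw new IllegalArgumentException("a job needs at least one step");
        steps.forEach(Runnable::run);
    }

    // Helper: a Reader over an in-memory list.
    static <T> Reader<T> fromList(List<T> items) {
        Iterator<T> it = items.iterator();
        return () -> it.hasNext() ? it.next() : null;
    }
}
```

For example, with a chunk size of 2, reading `1` to `5` and filtering out even numbers produces three writes, `[item-1]`, `[item-3]` and `[item-5]`, because the chunk boundary is counted on items read rather than items written.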

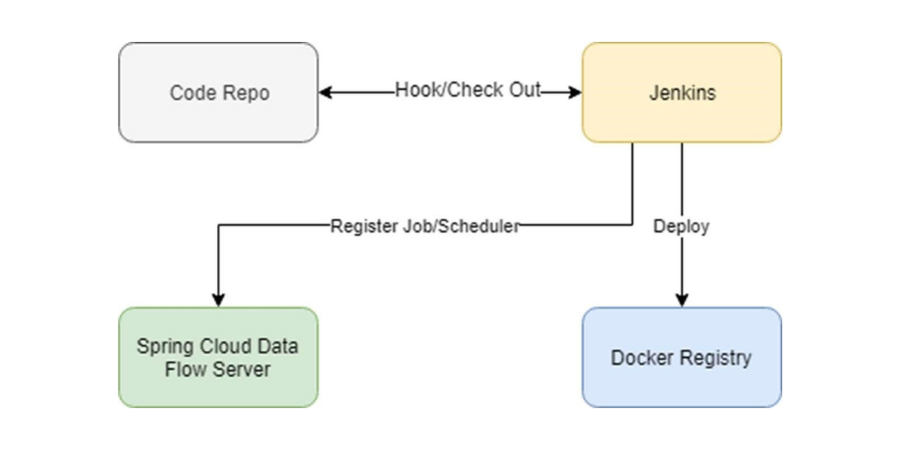

Spring Cloud Data Flow is deployed like any other microservice, but it is granted permissions on the platform where it runs, which lets it deploy applications and schedule jobs there. This is made possible by its Service Provider Interface (SPI) module, which by default supports Kubernetes and Cloud Foundry.
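The idea behind the SPI can be shown with a small plain-Java sketch: the server codes against a single deployer interface, and a platform-specific implementation is plugged in and selected by platform name. `PlatformDeployer`, the two implementations and the returned ids are all hypothetical; the real SPI lives in the Spring Cloud Deployer project (for example its `AppDeployer` and `TaskLauncher` interfaces) and has richer signatures.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

// Hypothetical SPI: the only contract the server depends on.
interface PlatformDeployer {
    String platform();
    String deploy(String appName);   // returns a platform-specific deployment id
}

// Hypothetical Kubernetes implementation; a real one would call the cluster API.
final class KubernetesDeployer implements PlatformDeployer {
    public String platform() { return "kubernetes"; }
    public String deploy(String appName) { return "k8s/" + appName; }
}

// Hypothetical Cloud Foundry implementation.
final class CloudFoundryDeployer implements PlatformDeployer {
    public String platform() { return "cloudfoundry"; }
    public String deploy(String appName) { return "cf/" + appName; }
}

// The "server" selects an implementation by platform name and never
// references a concrete platform class directly.
final class DataFlowServerSketch {
    private final Map<String, PlatformDeployer> deployers;

    DataFlowServerSketch(List<PlatformDeployer> available) {
        this.deployers = available.stream()
                .collect(Collectors.toMap(PlatformDeployer::platform, Function.identity()));
    }

    String deploy(String platform, String appName) {
        PlatformDeployer deployer = deployers.get(platform);
        if (deployer == null) throw new IllegalArgumentException("no deployer for " + platform);
        return deployer.deploy(appName);
    }
}
```

This is also why community projects can add platforms such as OpenShift or Nomad: they provide another implementation of the interface rather than changing the server.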

Thanks to its REST API, Spring Cloud Data Flow can be integrated into Continuous Integration and Continuous Deployment (CI/CD) flows. The integration would look like this:

Figure 3: CI/CD deployment.
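As a sketch of this integration, a pipeline step could call the REST API to create and deploy a stream once the build has published new application versions. The class below uses the JDK's `java.net.http` client against `POST /streams/definitions` with the `name`, `definition` and `deploy` parameters (verify them against your server version). The server URL, the stream name `timelog` and the `time | log` definition are illustrative assumptions, and the apps named in the definition must already be registered on the server.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Sketch of a CI/CD step that creates and deploys a stream via the Data Flow REST API.
// ASSUMPTIONS: the server URL comes from the pipeline configuration and the
// endpoint/parameter names match the server version in use.
final class DataFlowCiClient {

    private final URI server;
    private final HttpClient http = HttpClient.newHttpClient();

    DataFlowCiClient(String serverUrl) { this.server = URI.create(serverUrl); }

    // Encodes parameters as application/x-www-form-urlencoded, in insertion order.
    static String formBody(Map<String, String> params) {
        return params.entrySet().stream()
                .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8)
                        + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
    }

    static Map<String, String> streamParams(String name, String definition) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("name", name);
        params.put("definition", definition);   // stream DSL, e.g. "time | log"
        params.put("deploy", "true");           // create and deploy in one call
        return params;
    }

    HttpRequest createStreamRequest(String name, String definition) {
        return HttpRequest.newBuilder(server.resolve("/streams/definitions"))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(streamParams(name, definition))))
                .build();
    }

    // Sends the request and fails the pipeline step on any non-2xx status.
    String send(HttpRequest request) throws IOException, InterruptedException {
        HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() / 100 != 2) {
            throw new IllegalStateException("Data Flow returned " + response.statusCode() + ": " + response.body());
        }
        return response.body();
    }
}
```

In a pipeline, `client.send(client.createStreamRequest("timelog", "time | log"))` would run after the artifacts have been published; throwing on a non-2xx status makes the CI job fail visibly instead of silently skipping the deployment.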

Want to know more about Hunters?

A Hunter rises to the challenge of trying out new solutions, delivering results that make a difference. Join the Hunters programme and become part of a diverse group that generates and transfers knowledge. Anticipate the digital solutions that will help us grow.

Learn more about Hunters.