In this new article, we will analyze the probability of error, an ingredient related to the term performance. We will also discuss whether we are really talking about intelligence (whatever it means) or just brute force (computing ability).

In our previous post “but what the hell is machine learning?” we presented a few strokes of what artificial intelligence means, guided by a concrete example of application in artificial vision (one of the tasks recognized as intelligent) of a deep convolutional neural network (Imagenet challenge).

However, in this blog we did not get to give a formal definition of machine learning (hereinafter, ML), as we still needed to analyze the probability of error, an ingredient related to the term performance (and an integral part of the formal definition of ML, as we will see later).

Also, in this new blog, we will discuss whether we are really talking about intelligence (whatever it means) or purely brute force (computational capacity).

Probability of error and black-boxes

At this point in the blog, we are clear that the ML branch of artificial intelligence is based on learning a task (artificial vision, translating, playing chess, ...) from thousands of sample data (X, Y) (also called “samples” or “observations”).

The science that learns and draws conclusions from data (samples or observations) is statistics, and statistics is the science of probabilities (or uncertainties). Statistical conclusions are not deterministic: typically conclusions and predictions have a degree of uncertainty (degree of significance).

The ML algorithms, learning from data and how it could not be otherwise, are based “ab initio” on statistics and, therefore, the result of the tasks that an intelligent machine executes is subject to the laws of statistics and, in particular, to the error.

Since error is also a very human feature, the error does not invalidate ML's "smart" feature, but it does differentiate the difference from the more traditional and deterministic algorithms that we are most accustomed to in traditional ML processes software development (based on logical steps "if.. then... ") for which, given a specific input data set, the designer of the "traditional algorithm" can explain in detail the reason for the output.

Today, nobody can explain how VGG19 (the deep convolutional network that we used as an example in our previous blog) is able to distinguish a German Shepherd from a Golden Retriever as a set of logical steps, in the same way that we cannot explain today how we humans do it . This characteristic of some of the ML algorithms, among which are the neural networks , are called “black-box” (black box: my algorithm makes good predictions, but don't ask me the details of how it does it).

The "black-box" property of neural networks also does not invalidate the "smart" property of an ML algorithm, since our own brain is a huge blackbox..

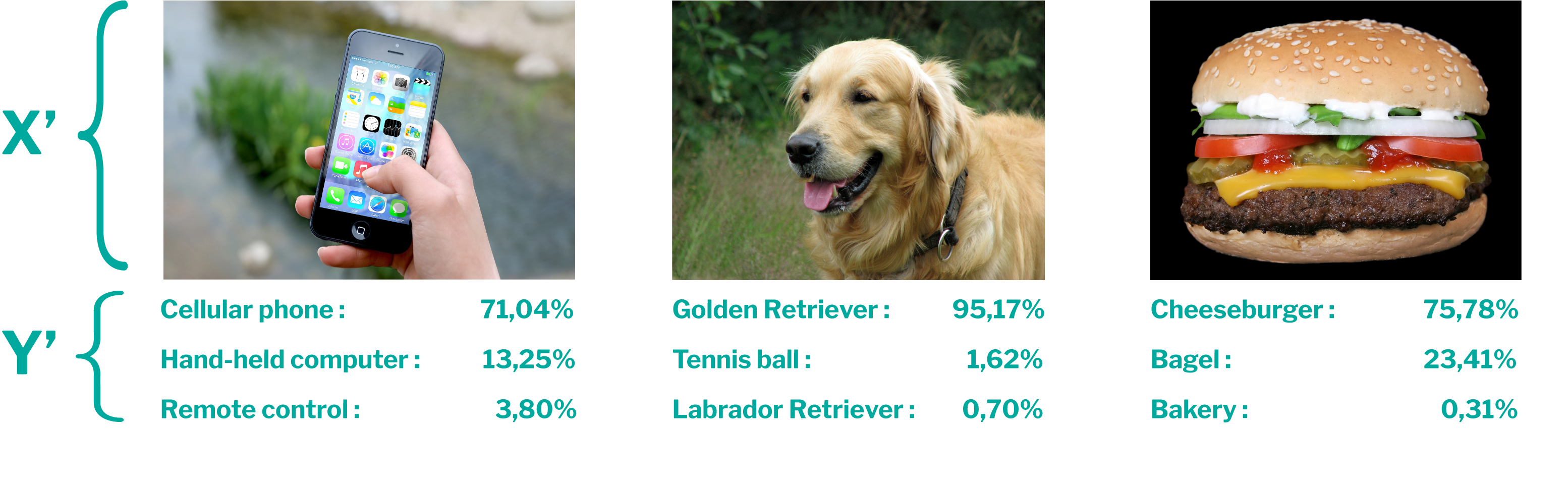

In the following figure, we show again the results, and their certainties (probabilities of Y´), that returned us the intelligent algorithm VGG19 when we provided him three images extracted from Internet (X´) in our previous blog:

As we can see, we have marked for each image X´ three predictions Y´ of the deep convolutional neural network VGG19 with their corresponding three higher probabilities. The most likely are correct, but we have other less likely answers to consider.

Now imagine that I integrate this intelligent algorithm into an application to automatically label Instagram images (for example), which were not labeled by the users themselves who uploaded them, and I make the answer to put on the label by this smart software is the one for which the algorithm gives me the greatest chance of being correct (in the previous example, “mobile”, “Golden Retriever” and “hamburger” respectively). In this case, nothing irreparable would happen if instead of “Golden Retriever” the algorithm returned to me “German Shepherd” (perhaps, quite likely, a complaint from some client).

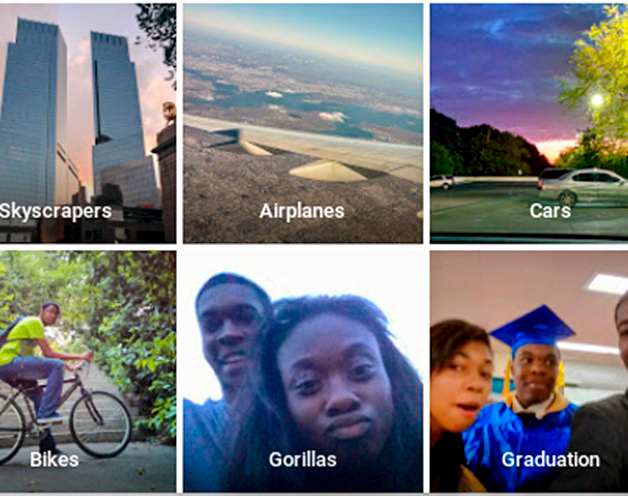

However, there are other situations where these errors are not acceptable. In 2015, news broke that Google's image classifier was (sometimes) labeling people of color as "gorillas" (see image).

Of course, Google immediately disabled its automatic classifier, and apologized. Two years later they it turned it back on but removing from the possible answers “gorilla”, thus the algorithm will never provide Y'=” gorilla” for any X' image and it is impossible for the error to be reproduced (with the side effect that it is also not able to tag photos of real gorillas).

The non-zero probability of error (indeterminacy) in predictions is an inherent property of ML (and, indeed, humans), and restricts its usability in certain problems and/or moments. In examples such as the previous one, it invalidates the solution: we will never have the certainty (100% probability) that a neural network does not label a person as “gorilla” (or as “pizza”), and some errors are unforgivable and invalidate the model (or at least part of it).

Also, in the press, we saw that on March 18, 2018, an autonomous Uber car ran over a woman in Arizona (resulting in death). However, every day thousands of pedestrians around the world are hit by human-driven vehicles. What is the probability that a self-contained car will have an accident compared to the probability that a car driven by a human will have an accident? In my opinion, this is the relevant question to answer before “demonizing” autonomous cars (as happened in March 2018).

I can perfectly imagine a future in which we feel safer if we are transported by autonomous vehicles than by vehicles that we drive ourselves. I can also imagine a future in which we feel more comfortable with a diagnosis and surgery performed by a machine than by a human. We have seen it in science fiction films, but today it is no longer so much science fiction: Hal 9,000 in "2001: an odyssey through space", in its kindest facet, is today called Alexa, Siri, Cortana, ... all based on deep neural networks (artificial intelligence).

Brute force or intelligence?

At this point in the blog, the reader who does not already know the fundamentals of ML will have some added players arriving on stage (IoT, BigData , Industry or Health 4.0, etc.) and understanding why now (availability of (big) data of everything type for learning all kinds of tasks thanks to IoT, storage capacity and massive and parallel processing for learning in a reasonable time thanks to BigData and Moore's Law, etc.). But are we talking about intelligence or brute force?

My math teachers would not have been very impressed in the 1980s that with 140 million adjustable parameters (as VGG19 has) it would theoretically be feasible to solve almost any problem: “Give me many adjustable parameters and will my cat dance the tango ”.

However, we are still far from having the power of our brain. For example, an artificial neural network equivalent only to the V1 cortex of our brain (the only one of the five visual cortexes that relates to our ability to distinguish and identify objects through our sense of sight) would have in the order of 10 billion "adjustable parameters" (a few orders of magnitude greater than the adjustable parameters of VGG19 and any algorithm that exists today). Therefore, if we recognize that human vision is an intelligent activity we cannot rule out that the brute force of our brain is one of the main ingredients of our intelligence, nor to say that the machine is not intelligent (its is only brute force).

On the other hand, after Go game experts brazenly analyzed how a machine (AlphaGo) beat Lee Sedol (world champion) with neural network techniques in 4 out of 5 games, they concluded that AlphaGo employed solutions that human players (including Lee Sedol) had not considered before. Therefore, the machine invented something new that no human had been able to teach him and that was decisive in defeating their champion.

I let the reader draw his own conclusions of whether or not we are talking about intelligence, based on his own intuitive definition of this term.

Machine learning: formal definition

I finish this second blog with a more formal definition of ML, which I hope will not pose a difficult obstacle to overcome.

Tom Mitchel defines: “ML is the field of artificial intelligence that deals with building computer programs that automatically improve with experience . A program is said to learn from experience E with respect to some kind of T-task and P-performance if its performance in performing T-type tasks, as measured by P, improves with experience E"

This definition is applicable to all types of ML (here we have only given examples of a specific type of ML: "supervised" learning and, fundamentally, neural networks).

If now the reader who has reached this point in the blog replaced (for example Imagenet reference have been used in these blogs): it is only brute force). will finish reading this blog understanding one of the formal definitions most commonly used in the literature of Machine Learning.

Its application to other T tasks (”making a diagnosis“,” translating a text“,...), other starting data for learning (X,Y) (”analytical and diagnostic“,” original text and translated text“...) and other performance measurements P (”probability of diagnoses and incorrect“,” probability of phrases and mistranslated",...) are immediate.

Pablo Méndez Llatas, Project Manager